Plus, understand what it takes to make any A/B testing example work for your business with these handy tips and tricks.

We hear it all the time as a tenet of paid media marketing: Always be testing. But it’s only helpful to “always be testing” when your tests are sure to contribute to more successful campaigns.

I fall into the camp of “always be testing…when you have a good hypothesis.” If you see something can be improved and you have an idea for how to improve it, by all means, give it a shot. But don’t just throw things at the wall and hope something sticks. It’s important to have a thought-out approach to A/B testing so that if and when that needle moves, you know why and can test and iterate on it again and again.

In this post, I want to run through my best PPC A/B testing examples and share tips that will help you create the most meaningful and impactful A/B tests for your PPC campaigns.


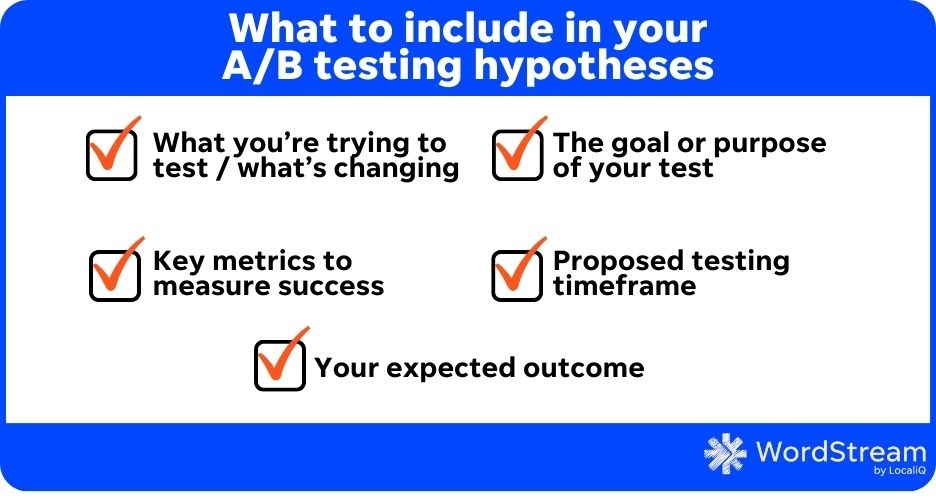

Regardless of which A/B testing example you find inspires you to do your own test, be sure to focus on your hypothesis throughout your experimentation. Here’s what I mean:

As I mentioned above, I believe you should always start any test with a hypothesis. What are you trying to test and why? But don’t just say “I think a new ad will perform better.” Try to articulate what you’re going for when running your A/B test. In my experience, when you’re more particular during the hypothesis creation, you’ll have a better test and more actionable and transferable results.

To help illustrate this point, here’s a typical A/B testing example hypothesis: “I want to test automated bidding to see if it works better.”

Sure, this might be a good test, but what does “work better” mean? A good, focused hypothesis almost always incorporates some level of detail. In the following steps, we’ll outline what the specifics are, but for this stage, think of it as a statement to the highest boss you have. They likely don’t know the nitty-gritty numbers you’re looking at on a day-to-day basis, but they want to know what’s going on.

This would be a better version:

Hypothesis: “Automated bidding will help us achieve lower CPAs on our main conversion action.”

To get you started, here are some A/B test hypothesis examples for a few different experiments you could run:

Bonus reading material to help you get started developing a hypothesis: should you use the 10% or 10x Philosophy?

Now that you have your hypothesis, let’s get down to actually making this test happen.


There are several ways you can test a hypothesis in paid media platforms, like Google Ads and Microsoft Ads. And, depending on what platform you use, there may be some A/B testing tools available to help.

There are no real “wrong” ways to test a hypothesis, but there are some pros and cons you should be aware of with each of the following PPC A/B testing examples.

This first A/B testing example is likely the easiest for most advertisers. Here you take note of the data from your existing setup, then make the changes that support your hypothesis, run the campaign that way for a while, then compare stats. Easy enough.

It may look something like this: you have four weeks’ worth of data with your evergreen ad copy. You then pause those variants, launch cost-focused copy for four weeks, and compare the two periods.

This method of testing can be useful and can yield good results. It’s easy to implement and only requires you to monitor your campaign for large swings in performance.

The downside is that the variants never overlap with each other. Was there some seasonal effect that took place in the second four weeks? Were you short on budget for the month and needed to pull back on spending to hit your levels? Did a news story impact performance for worse (or better) during either period? Did any other aspects of the campaign change during the eight weeks the test was running?

It’s not perfect, but it can be useful to test sequentially to see results.
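To make the sequential comparison concrete, here is a minimal Python sketch of the before-and-after math. The cost and conversion totals are hypothetical numbers chosen for illustration, not data from a real account, and the helper names `cpa` and `percent_change` are my own.

```python
def cpa(cost: float, conversions: int) -> float:
    """Cost per acquisition; infinite when there are no conversions."""
    return cost / conversions if conversions else float("inf")


def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, as a percentage."""
    return (after - before) / before * 100


# Hypothetical four-week totals for each period of a sequential test.
evergreen = {"cost": 4000.0, "conversions": 80}
cost_focused = {"cost": 4200.0, "conversions": 100}

cpa_before = cpa(**evergreen)
cpa_after = cpa(**cost_focused)
change = percent_change(cpa_before, cpa_after)

print(f"CPA: {cpa_before:.2f} -> {cpa_after:.2f} ({change:+.1f}%)")
```

A negative change means CPA went down in the second period. Keep in mind that this math cannot tell you whether the copy or something else (seasonality, budget, news) caused the change.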

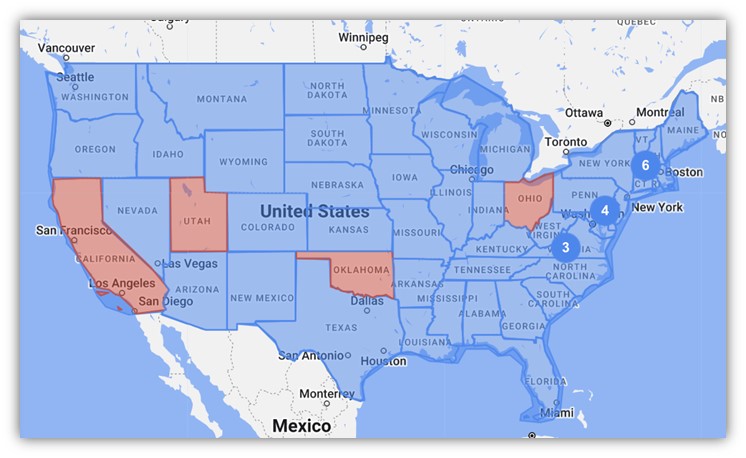

In a geolocation A/B testing example, you keep the existing campaign setup as it is, then create an experiment variant in a second location. This could be either an expanded market or a portion of where you’re currently targeting (e.g., your campaign targets the entire United States, but for this test, you apply the changes in only a handful of states).

To accomplish this, you need to make sure your control and experiment are mutually exclusive so there’s no overlap. This can be done by setting up new campaigns and excluding locations in your control campaign.
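If you keep your location lists in a spreadsheet or export, a quick sanity check for overlap might look like the sketch below. The state lists and the function name `overlapping_locations` are hypothetical; this only compares two lists you supply and does not read anything from an ad platform.

```python
from typing import Set

# Hypothetical location lists for a geolocation test.
control_states = {"NE", "KS", "IA", "MO"}
experiment_states = {"OK", "AR"}


def overlapping_locations(control: Set[str], experiment: Set[str]) -> Set[str]:
    """Return locations targeted by both campaigns (should be empty)."""
    return control & experiment


overlap = overlapping_locations(control_states, experiment_states)
if overlap:
    print(f"Fix before launch, both campaigns target: {sorted(overlap)}")
else:
    print("Control and experiment are mutually exclusive.")
```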

Unlike the sequential A/B testing example, geolocation testing lets you run your variants at the same time and compare results. Any headwinds or tailwinds you feel during the run of the test should be roughly equal for both locations.

The downsides come when you realize that no two regions are exactly alike. Who’s to say why a cost-focused message might work better in Oklahoma than in Nebraska? Or why the East Coast performs better with automated bidding than the Mountain time zone?

Split tests are likely the best example of A/B testing because they remove some of the cons we see in sequential and geolocation testing. The problem is that a true split test is also the hardest to come by.

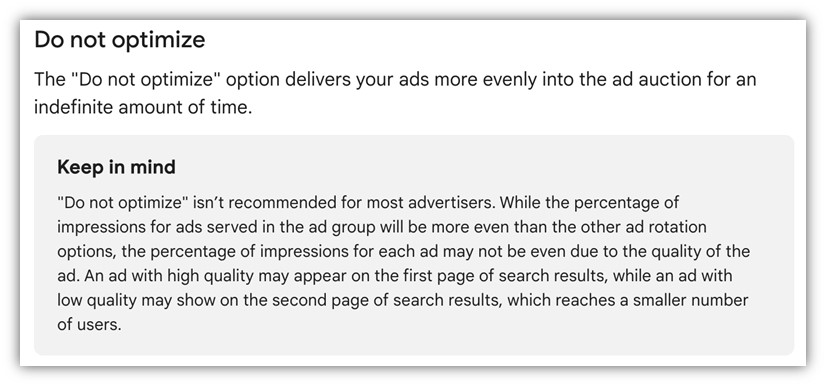

Platforms like Google Ads and Meta Ads have long done away with rotating variants evenly. For example, both platforms use machine learning that will almost always favor one ad variant over another based on the desired outcome of the campaign or ad set. The same is true for bid strategies. If you’re testing manual versus automated bidding, or one CPA target versus another, those two campaigns are unlikely to enter the auction on equal footing. One will be prioritized over the other and you’ll have an unbalanced test.

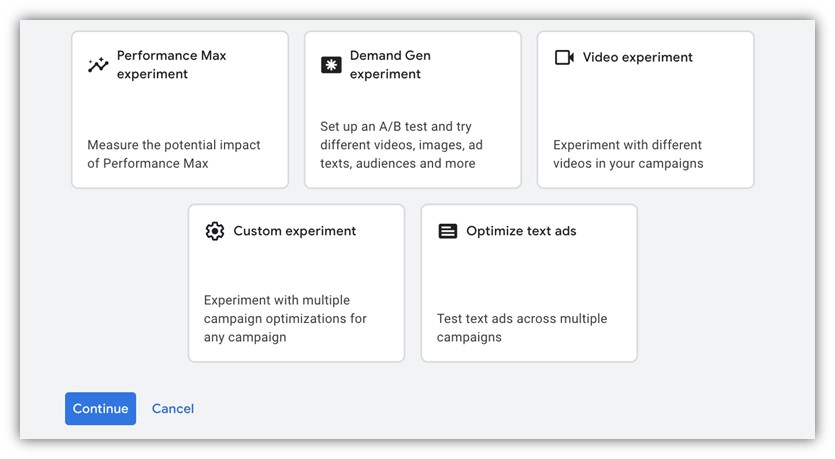

This is where experiments in Google Ads and split testing in Facebook Ads can come in.

By using these tools, you can set up tests to focus on single (or multiple) variables and give them each a fair shot in the auction.

If you’re interested in learning more about these tools, here are a couple of videos that walk you through Google Ads Experiments and Facebook Ads A/B testing.

Now that we know how we’re going to test, we need to get more specific on the PPC metrics we’re going to use to determine success. Unfortunately, I’m not willing to accept “perform better” as a good answer.

First, we have to decide what our main KPI is. Is it your Google Ads cost per lead? Conversion rate? Click-through rate? Impression share? This will rely entirely on your hypothesis and which A/B testing example you choose to implement. Pick the stat that will best reflect a success or failure for your test. (Don’t worry, this isn’t the only metric we’d focus on. More on that in a minute.)

Just like the functionality of the test, there are three common ways to approach this. Let’s say we’re trying to improve the CPA for an account. Here are some ways I could phrase my “success” metric:

All of these are valid ways of measurement. Choose the one that works best for your purposes.


Now let’s get into some of those other metrics I alluded to. While you might be working to optimize your cost per lead, that doesn’t mean that all other metrics are going to stay flat. In fact, I’d venture to bet that many of them will change quite a bit. It’s up to you to decide what is an acceptable level of change on other stats.

Maybe you don’t care if your click-through rate goes down 20% as long as cost per lead goes down to a profitable level. Maybe you don’t mind if you see a cost per click increase as long as revenue stays stable. But not everyone is alright with other stats moving too much.

Here’s an A/B testing example including varying metrics: I have a client who wanted to decrease the cost per lead on his branded terms by 20%, but he wasn’t willing to let impression share dip below 80%. While we knew it was going to be tricky to thread the needle, we set up an A/B testing experiment for target CPA bidding to try and lower the CPA. As we got into it, we realized that to hit our CPA metric, Google only showed the ads for about 60% of the impressions we could have had. That was a dealbreaker for him, so we turned the test off and found another way.

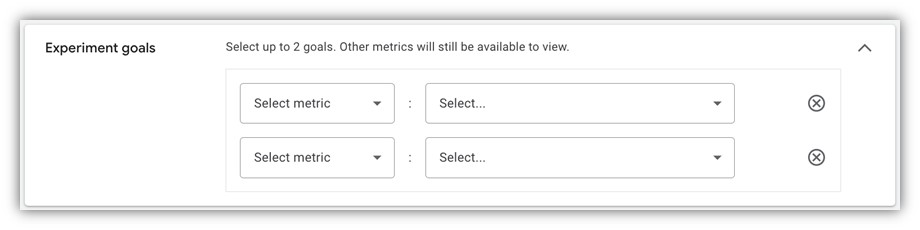

When you set up experiments in Google Ads, the platform even asks for two key metrics and what you expect to happen to each. You should be doing this for yourself and asking, “Are there any potential deal breakers for metrics that would require me to stop this test before it’s finished?”
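One way to keep yourself honest about deal breakers is to write them down as explicit thresholds before the test starts. The sketch below encodes the client example: a 20% CPA reduction goal with an 80% impression share floor. The CPA dollar figures, the `ExperimentSnapshot` class, and the `evaluate` function are hypothetical and for illustration only.

```python
from dataclasses import dataclass


@dataclass
class ExperimentSnapshot:
    control_cpa: float
    experiment_cpa: float
    experiment_impression_share: float  # fraction between 0.0 and 1.0


def evaluate(snapshot: ExperimentSnapshot,
             target_cpa_drop: float = 0.20,
             min_impression_share: float = 0.80) -> str:
    """Check the deal breaker first, then the primary KPI."""
    if snapshot.experiment_impression_share < min_impression_share:
        return "stop: impression share below the agreed floor"
    cpa_drop = (snapshot.control_cpa - snapshot.experiment_cpa) / snapshot.control_cpa
    if cpa_drop >= target_cpa_drop:
        return "success: CPA target met within guardrails"
    return "keep running: CPA target not met yet"


# Hypothetical CPAs; the 60% impression share mirrors the client story.
client_test = ExperimentSnapshot(control_cpa=50.0,
                                 experiment_cpa=40.0,
                                 experiment_impression_share=0.60)
verdict = evaluate(client_test)
print(verdict)
```

The deal breaker is checked before the primary KPI on purpose: in the client example, the CPA goal was reachable, but only by giving up more impression share than he would accept.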

Unfortunately, sometimes A/B tests have to come to an end without a clear winner. These tests can’t go on forever or else you’ll never test anything else.

But on the flip side, A/B tests need to run long enough to give you enough data to make decisions on. Only the very largest of accounts could potentially make a decision after a single week of testing, and even then, the difference would have to be night and day for me to be on board.

I usually recommend a minimum of two weeks for a test to run and a maximum of two months. Anything beyond that can become unmanageable and leaves room for outside factors to invalidate the test.

This means that, regardless of which A/B testing example you choose to run, be sure that within two months your test will have enough data to decide, with confidence, if your hypothesis was correct.
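If you want a rough read on whether you have “enough data,” one common approach (my suggestion, not something the ad platforms require) is a two-proportion z-test on conversion rate. The sketch below uses only Python’s standard library. The click and conversion counts are hypothetical, and the usual 0.05 cutoff is a convention, not a rule.

```python
import math


def two_proportion_z_test(conv_a: int, clicks_a: int,
                          conv_b: int, clicks_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rate."""
    rate_a = conv_a / clicks_a
    rate_b = conv_b / clicks_b
    # Pooled rate under the assumption that both variants convert equally.
    pooled = (conv_a + conv_b) / (clicks_a + clicks_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / clicks_a + 1 / clicks_b))
    if std_err == 0:
        raise ValueError("Not enough variation to run the test.")
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical totals: control converts 100 of 2,000 clicks,
# the experiment converts 130 of 2,000 clicks.
z, p_value = two_proportion_z_test(100, 2000, 130, 2000)
print(f"z = {z:.2f}, p = {p_value:.3f}")
```

A small p-value suggests the gap between variants is unlikely to be noise alone. It says nothing about the deal breakers you set, so read it alongside your other metrics.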

A/B testing is an invaluable tool that all marketers should likely be using in one way, shape, or form in their ad accounts. Be sure you have a clear head going into the test, including a hypothesis, plan of action, and potential deal breakers before you try any A/B testing example. That way, you’ll have set yourself up for success no matter what the outcome. If you want more A/B testing examples and ideas for your business, see how our solutions can help you maximize your A/B testing success!

Here are the top three A/B testing examples to try in your PPC accounts:

1. Sequential testing
2. Geolocation testing
3. Split testing